Photonics: Moore's Law and Computing at the speed of light

History of Computing:

Until the mid-20th century, "computers" were the people who performed computation, namely scientific calculations, by hand. As with anything humans do, computing by hand was prone to error and inefficiency. Computations had to be verified multiple times because they went into mathematical tables used for farming and planting, exploration and research, navigation (especially on the high seas) and even launching missiles at an enemy in war.

A small error anywhere would compound, leading to enormous costs. The challenge was to replace humans with machines that compute mathematical equations perfectly every single time, promoting humans to the creative task of programming and leaving the mechanical work to machines. The birth of computers in their modern form can be credited to Charles Babbage, who designed the following machines, and Ada Lovelace, who wrote what is widely regarded as the first published algorithm intended for the Analytical Engine:

Difference Engine - an automatic mechanical calculator designed to tabulate polynomial functions

Analytical Engine - the first design for a general-purpose computer incorporating an arithmetic logic unit, control flow and integrated memory.

But it was only in the 1930s that Alan Turing envisioned something far more powerful: the universality of computation, or Turing-completeness. The fundamental principle is as follows:

Universality of computation entails that everything that the laws of physics require a physical object to do can, in principle, be emulated in arbitrarily fine detail by some program on a general-purpose computer, provided it is given enough time and memory. - David Deutsch

This principle can be used to argue that building an AGI is possible, and that the universal quantum computer proposed by Deutsch is the true universal computer; both are topics for another day.

Traditional Computers:

One of the key elements of a computer is how it represents information. While humans communicate with the decimal system and the alphabet, computers encode everything in the binary system, i.e. 1 and 0. With these two digits, all other numbers and characters (e.g. via the ASCII encoding) are represented. But how does a traditional computer operate? By controlling electricity, or rather electrons.
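To make this concrete, here is a minimal Python sketch showing how a number and a short piece of text reduce to strings of 1s and 0s. It is an illustration only. The helper names `to_bits`, `encode_ascii` and `decode_ascii` are hypothetical, but the encoding they apply is standard 8-bit ASCII.

```python
def to_bits(n: int, width: int = 8) -> str:
    """Binary representation of a non-negative integer, zero-padded to `width` bits."""
    return format(n, f"0{width}b")


def encode_ascii(text: str) -> list[str]:
    """Encode each character as its 8-bit ASCII code."""
    return [to_bits(byte) for byte in text.encode("ascii")]


def decode_ascii(bits: list[str]) -> str:
    """Invert encode_ascii by parsing each 8-bit group back into a character."""
    return bytes(int(b, 2) for b in bits).decode("ascii")


print(to_bits(42))         # 00101010
print(encode_ascii("Hi"))  # ['01001000', '01101001']
print(decode_ascii(encode_ascii("Hi")))  # Hi
```

Every piece of data a computer handles, whether numbers, text, images or model weights, is ultimately stored and moved as patterns like these.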

Communication between two systems is merely sending pulses of electricity, with each component acting as a switch: when electricity flows, the switch is "ON" (represented as '1'), and when it stops, the switch is "OFF" (represented as '0').

To perform this switching, early computers were built from thermionic valves, aka vacuum tubes. The principle behind them was thermionic emission: heat up a metal, and the thermal energy knocks some electrons loose. Computers made of vacuum tubes were behemoths that filled entire rooms. Today, far more powerful computers fit into our pockets, thanks to transistors. A modern chip has billions of transistors and may seem complex, but its purpose is simple: to perform computations through layers of abstraction.

ENIAC. Credits: Computer Hope

The 3 primary building blocks of a modern computer are:

Transistor: a semiconductor device, usually made of silicon (remember Silicon Valley?), used to amplify or switch electronic signals and electrical power. A transistor is a three-terminal device:

current enters through the transistor's source,

flows through a channel whose conduction is controlled by the gate, which acts as the switch,

and finally exits through the drain; the output often becomes the input to another transistor.

Transistors are used to build logic gates and Boolean circuits that perform computations.

Resistor: a component that “resists” the flow of electrons (current) through it, regulating or setting that current according to the conductive material it is made of.

Capacitor: a component that has the “capacity” to store energy in the form of an electrical charge, much like a small rechargeable battery.

The other building blocks include amplifiers, mixers, inductors and diodes.
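Transistors act as switches, and logic gates are built from them. The following sketch models that chain in Python. It assumes an idealized switch: `transistor` is a hypothetical helper that conducts when its gate input is 1. Two such switches in series are treated as a NAND gate, which glosses over the complementary pull-up network a real CMOS gate uses. Every other gate, and then a binary adder, is composed from NAND alone.

```python
def transistor(gate: int) -> bool:
    """Idealized switch (hypothetical model): the channel conducts when gate is 1."""
    return gate == 1


def nand(a: int, b: int) -> int:
    """Two switches in series: the output is pulled to 0 only if both conduct."""
    return 0 if transistor(a) and transistor(b) else 1


# Every other gate can be composed from NAND alone.
def not_(a: int) -> int:
    return nand(a, a)


def and_(a: int, b: int) -> int:
    return not_(nand(a, b))


def or_(a: int, b: int) -> int:
    return nand(not_(a), not_(b))


def xor(a: int, b: int) -> int:
    n = nand(a, b)
    return nand(nand(a, n), nand(b, n))


def half_adder(a: int, b: int) -> tuple[int, int]:
    """Add two bits, returning (sum, carry)."""
    return xor(a, b), and_(a, b)


def full_adder(a: int, b: int, carry_in: int) -> tuple[int, int]:
    """Add two bits plus an incoming carry, returning (sum, carry_out)."""
    s1, c1 = half_adder(a, b)
    s, c2 = half_adder(s1, carry_in)
    return s, or_(c1, c2)


def add(x: int, y: int, width: int = 8) -> int:
    """Ripple-carry addition of two unsigned integers, one bit at a time.

    Any carry out of the top bit is discarded, as in fixed-width hardware.
    """
    carry, result = 0, 0
    for i in range(width):
        s, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        result |= s << i
    return result


print(add(5, 3))      # 8
print(add(200, 100))  # 44 (300 overflows 8 bits: 300 - 256)
```

Real processors use far more optimized circuits, but the principle is the same: arithmetic emerges from layers of switches.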

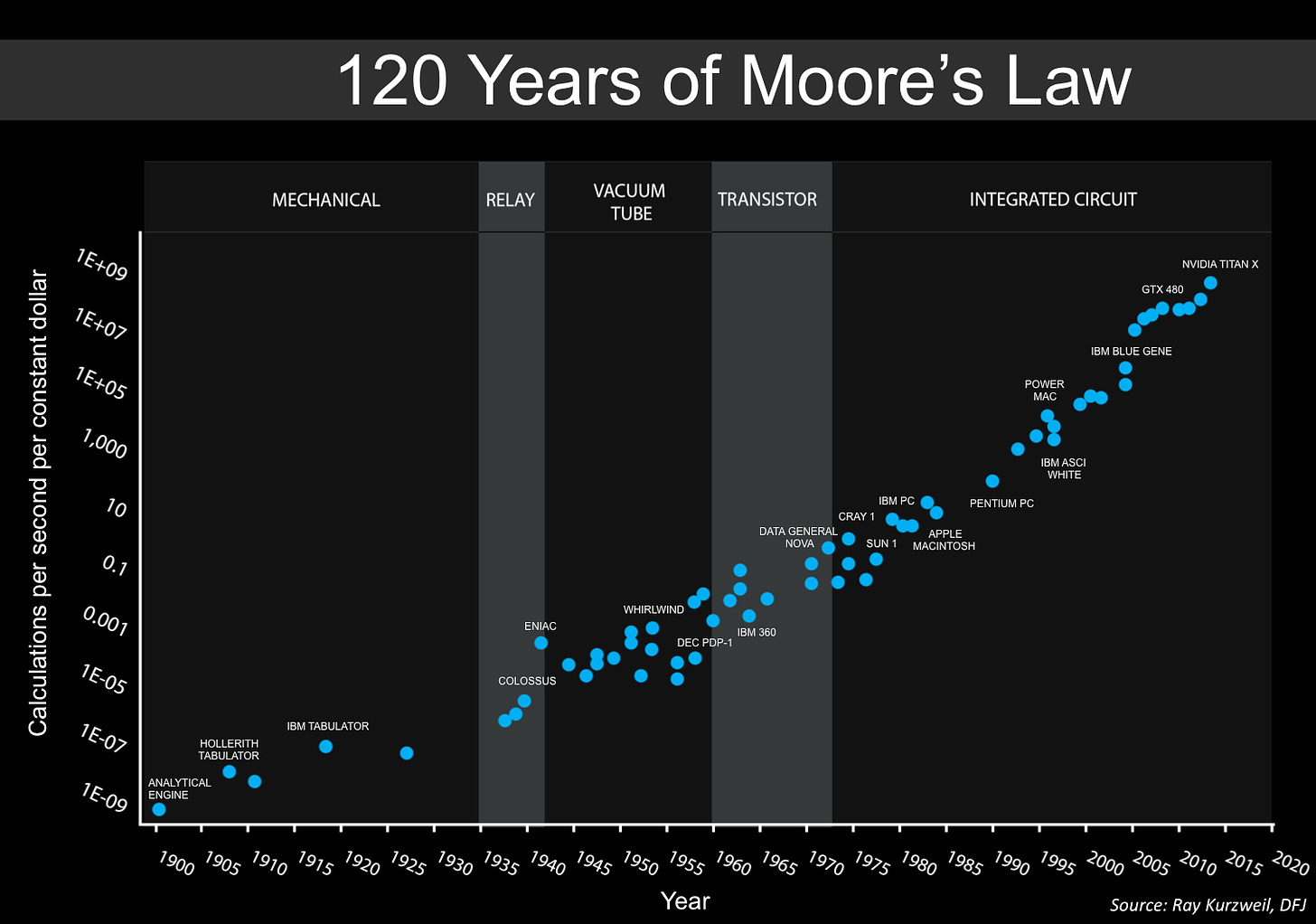

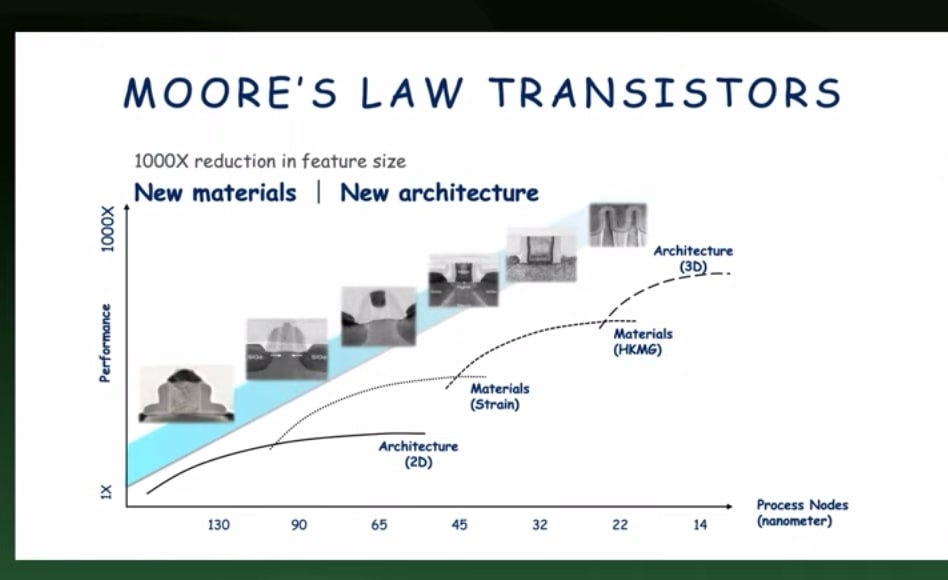

Combining these building blocks into electronic circuits built from logic units on a single chip gave us the integrated circuit, or IC. Since then, first dozens of components could be integrated into a chip, then hundreds, thousands and millions. Gordon Moore, co-founder of Intel, observed that the number of components being placed on a chip was doubling roughly every one to two years; this came to be known as Moore's law. Today, a chip holds billions of transistors. Much of the progress in the amount of data we can compute on, and the rise of ML applications, can be attributed to Moore's law. Moore's law has been declared dead many times in the past; although it isn't dead, many argue it has been steadily slowing down.
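Moore's observation describes exponential growth. If the count doubles every T years, then N(t) = N0 * 2^(t/T). The sketch below evaluates that formula and its inverse. Two inputs are illustrative choices, not data from this article: the starting point of roughly 2,300 transistors (the Intel 4004, 1971) and the 50-year horizon. Real chips did not follow the curve exactly.

```python
import math


def projected_count(n0: float, years: float, doubling_period: float = 2.0) -> float:
    """Moore's-law style growth: N(t) = N0 * 2 ** (t / T)."""
    return n0 * 2 ** (years / doubling_period)


def years_to_reach(n0: float, target: float, doubling_period: float = 2.0) -> float:
    """Invert the formula: t = T * log2(target / N0)."""
    return doubling_period * math.log2(target / n0)


start = 2300  # illustrative: roughly the transistor count of the Intel 4004 (1971)
print(f"{projected_count(start, 50):.3g}")                       # 7.72e+10
print(f"{projected_count(start, 50, doubling_period=1.0):.3g}")  # yearly doubling
print(f"{years_to_reach(start, 1e9):.1f} years to reach a billion")
```

The gap between the two projections shows how sensitive the outcome is to the assumed doubling period. This is why the difference between "one" and "two" years in the law matters so much.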

Is there a physical limit to how many transistors we can pack onto a silicon chip? To get an approximate sense, consider how chip manufacturing processes are named: 90nm, 65nm, 40nm, 28nm, 22nm and 14nm. The latest processes include 7nm and 5nm, while 3nm is under research. But what does this mean? The '90' or '7' nm is just the name of a process generation and doesn't correspond to any physical size on the chip. It does, however, indicate the increasing density at which transistors can be packed together. For example, 7nm technology packs about 100 million transistors per mm^2, and 5nm packs somewhere between 130 and 230 million transistors per mm^2.
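The density figures above support two quick estimates: how many transistors fit on a die, and how much area each transistor occupies on average. In this sketch the 100 mm^2 die is a hypothetical size chosen for illustration. The footprint is an average over the whole die, including wiring and other structures, so it is not the size of a single device.

```python
import math

NM2_PER_MM2 = 1e12  # 1 mm = 1e6 nm, so 1 mm^2 = 1e12 nm^2


def transistors_on_die(density_mtr_per_mm2: float, die_area_mm2: float) -> float:
    """Total transistors for a density given in millions of transistors per mm^2."""
    return density_mtr_per_mm2 * 1e6 * die_area_mm2


def avg_footprint_side_nm(density_mtr_per_mm2: float) -> float:
    """Side length of the average square area available per transistor."""
    area_nm2 = NM2_PER_MM2 / (density_mtr_per_mm2 * 1e6)
    return math.sqrt(area_nm2)


DIE_AREA_MM2 = 100  # hypothetical die size for illustration
for node, density in [("7nm", 100), ("5nm, low", 130), ("5nm, high", 230)]:
    total = transistors_on_die(density, DIE_AREA_MM2)
    side = avg_footprint_side_nm(density)
    print(f"{node}: {total:.2e} transistors, ~{side:.0f} nm x {side:.0f} nm each")
```

At about 100 million transistors per mm^2, each transistor averages roughly a 100 nm x 100 nm patch. That is far larger than 7 nm, which illustrates why the node name is a label rather than a measurement.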

As of 2017, transistors were about 70 silicon atoms wide. Given that a silicon atom is about 0.2nm across, Jim Keller believes a transistor could shrink to 10 atoms x 10 atoms x 10 atoms, below which quantum effects start taking over. That would offer a 10-20 year runway. Is that the limit on Moore's law?
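The runway estimate can be checked with simple arithmetic. The sketch rests on two assumptions of mine: one density doubling every two years, and density scaling with either the square (planar footprint) or the cube (the 10 x 10 x 10 atom volume) of the shrink in width. Neither assumption is Keller's stated method.

```python
import math

SI_ATOM_NM = 0.2  # approximate silicon atom size, as quoted in the text


def width_nm(atoms: int) -> float:
    """Convert a transistor width in silicon atoms to nanometres."""
    return atoms * SI_ATOM_NM


def runway_years(atoms_now: int, atoms_min: int, dims: int,
                 doubling_period: float = 2.0) -> float:
    """Years of density doubling left when shrinking from atoms_now to atoms_min.

    dims=2 assumes density scales with footprint area (planar devices);
    dims=3 assumes it scales with volume, as a 10 x 10 x 10 atom cube suggests.
    """
    density_gain = (atoms_now / atoms_min) ** dims
    doublings = math.log2(density_gain)
    return doublings * doubling_period


print(f"{width_nm(70):.1f} nm -> {width_nm(10):.1f} nm")          # 14.0 nm -> 2.0 nm
print(f"planar: {runway_years(70, 10, 2):.1f} years")            # planar: 11.2 years
print(f"volumetric: {runway_years(70, 10, 3):.1f} years")        # volumetric: 16.8 years
```

Under these assumptions, both estimates land inside the 10-20 year range quoted above.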

Jim Keller, the legendary microprocessor designer, claims otherwise. Having led chip designs at AMD, Apple, Tesla and Intel, and now leading the startup Tenstorrent, he argues that Moore's law cannot be attributed to just one factor. Thousands of factors play a role in sustaining Moore's law, such as materials science, new architectures and software design. Each has its own diminishing-returns curve.

Credits: Jim Keller

Perhaps some of these factors could lead to a complete paradigm shift in computing. The amount of data being generated has been rising exponentially, and one, if not all, of the following technologies could prolong Moore's law:

Quantum computing, harnessing quantum effects

Using new materials such as graphene or carbon nanotubes in place of silicon

New computer architectures focused on specific applications of AI

Photonic computing

This article focuses on photonic computing, which has the potential to meet the computational demands of AI using optical processing technologies.

Electronic to Photonic Computing:

Information processing has two parts: how fast data is transferred (data transmission) and how fast data is processed (data computation). Data transmission already happens over optical fiber using infrared light, allowing data to be transferred at very high speeds. The same idea could be extended to computation. In other words, the optics behind fiber communication could be used to build logic and CPU components.

The electronic revolution, powered by electrons, has allowed us to develop ever smaller and faster devices that have changed the way we communicate and store information. The photonic revolution, by harnessing the nature of light, will push the current limits of speed, capacity and accuracy with respect to data transfer and computation.

In today's semiconductor devices, information moves in the form of electrons, and the idea is to replace electrons with photons. How do electrons and photons travel? Both propagate as waves through a material such as silicon. While less energy is needed to transmit information in the form of photons, the wavelength of a photon is around 1000 times longer than that of an electron. This means photonic devices need to be much bigger than electronic devices.

However, Arnab Hazari from Intel argued in 2017 that photonic chips could be kept the same size as electronic chips while delivering more processing power, for two reasons:

a photonic chip needs only a few light sources, from which light can be routed around the chip

light travels at least 20 times faster than electrons

The benefits of photonic chips are huge: they can process data much faster while requiring less energy. It is estimated that they can compute at terabit-per-second speeds, as opposed to gigabits per second with electronics, a factor of 1000.
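To get a feel for what a factor of 1000 means, this short sketch treats the quoted figures as raw data rates and computes how long moving one terabyte would take. The 1 TB payload and the round rates of 1 Gb/s and 1 Tb/s are illustrative assumptions; real systems add protocol overhead.

```python
def transfer_seconds(num_bytes: float, bits_per_second: float) -> float:
    """Time to move num_bytes at a given raw bit rate (8 bits per byte)."""
    return num_bytes * 8 / bits_per_second


one_terabyte = 1e12
electronic = transfer_seconds(one_terabyte, 1e9)   # 1 Gb/s
photonic = transfer_seconds(one_terabyte, 1e12)    # 1 Tb/s
print(electronic, photonic, electronic / photonic)  # 8000.0 8.0 1000.0
```

At these rates, a job that keeps a gigabit link busy for over two hours finishes in about eight seconds.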

Unlike an electronic circuit, which needs millions of switches, a photonic circuit uses the interference patterns of waves to determine results. This could enable near-instantaneous computation by computing on data while it is in transit. For example, photonic chips could be used in glass-fiber sensors that measure stress, tension or bending in the wings of an airplane.

Traditional computing relies largely on serial processing, while photonic computing offers parallel processing by leveraging reflection. This reduces both the complexity of operations and the power requirements while offering high scalability.
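To see what serial versus parallel means for a typical AI workload, consider a matrix-vector product, the core operation of a neural-network layer. The sketch below counts the multiply-accumulate (MAC) steps a purely serial processor would take. It is an illustration only and does not model optics. The claim that photonic hardware evaluates all the independent dot products in a single pass comes from the text above, not from anything this code demonstrates.

```python
def serial_matvec(matrix: list[list[float]], vector: list[float]) -> tuple[list[float], int]:
    """Multiply a matrix by a vector one multiply-accumulate (MAC) at a time.

    Returns the result and the number of sequential MAC steps a single
    scalar unit would need.
    """
    steps = 0
    result = []
    for row in matrix:              # each row's dot product is independent...
        acc = 0.0
        for m, v in zip(row, vector):
            acc += m * v            # ...but a scalar unit still does one MAC per step
            steps += 1
        result.append(acc)
    return result, steps


y, steps = serial_matvec([[1, 2], [3, 4]], [5, 6])
print(y, steps)  # [17.0, 39.0] 4

n = 1000
print(f"{n} x {n} matrix: {n * n:,} sequential MACs")
```

The step count grows with rows x columns. Hardware that computes every row at once, as photonic designs aim to, collapses that count into a single pass.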

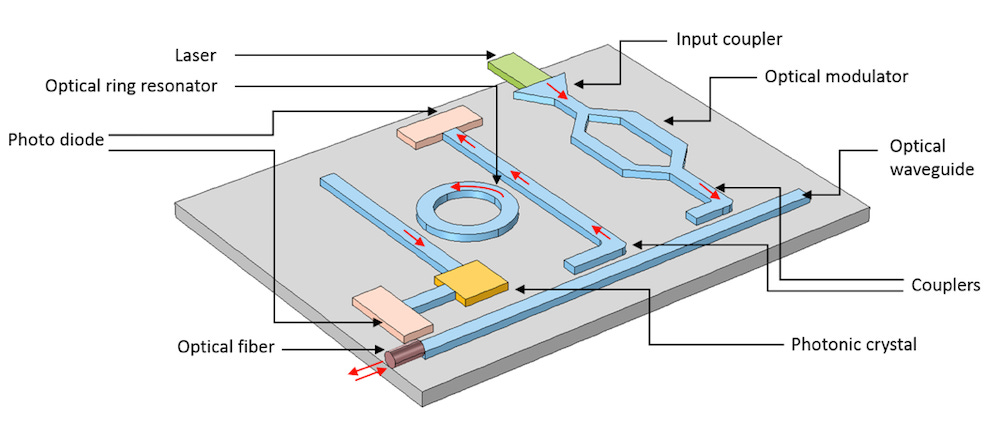

Just as transistors, resistors and capacitors are the 3 building blocks of an electronic circuit, the 3 fundamental building blocks of a photonic circuit are:

Waveguide - a geometrical structure capable of propagating electromagnetic waves in a preferred direction in space

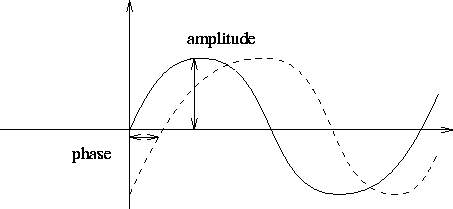

Phase - the relationship between two waves. If the peaks of two signals with the same frequency are in exact alignment at the same time, they are said to be in phase. Conversely, if the peaks of two signals with the same frequency are not in exact alignment at the same time, they are said to be out of phase. It can be measured in distance, time, or degrees.

Amplitude - the height of the wave; the power a wave carries is proportional to the square of its amplitude.

Credit: University of Edinburgh
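Phase and amplitude come together in interference, which is how a photonic circuit "computes". A standard example is the Mach-Zehnder interferometer: light is split into two waveguides, one arm is phase-shifted, and the two beams are recombined. The sketch assumes ideal, lossless 50:50 couplers described by one common matrix convention; other conventions differ by phase factors. It tracks complex field amplitudes, and the power at each output is the squared magnitude of its amplitude.

```python
import cmath
import math


def coupler(a: complex, b: complex) -> tuple[complex, complex]:
    """Ideal lossless 50:50 coupler acting on two complex field amplitudes."""
    s = 1 / math.sqrt(2)
    return s * (a + 1j * b), s * (1j * a + b)


def mzi(a: complex, b: complex, phi: float) -> tuple[complex, complex]:
    """Mach-Zehnder interferometer: split, phase-shift the upper arm by phi, recombine."""
    a, b = coupler(a, b)
    a = a * cmath.exp(1j * phi)  # phase shifter in the upper arm
    return coupler(a, b)


def output_powers(phi: float, amplitude: float = 1.0) -> tuple[float, float]:
    """Light enters the upper port only; power is |amplitude|^2 at each output."""
    out0, out1 = mzi(amplitude, 0.0, phi)
    return abs(out0) ** 2, abs(out1) ** 2


for phi in (0.0, math.pi / 2, math.pi):
    p0, p1 = output_powers(phi)
    print(f"phase {phi:.2f} rad -> outputs {p0:.2f}, {p1:.2f}")
```

In this convention, the upper output carries sin^2(phi/2) of the power and the lower output carries cos^2(phi/2). At a phase difference of 0, all the light leaves one port; at pi, it all leaves the other. Doubling the input amplitude quadruples the total output power. Meshes of such interferometers are one way researchers build optical matrix multipliers, though this is only one of several approaches.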

Light sources, transmission media and waveguides, amplifiers, detectors, modulators, couplers and resonators combine to form photonic integrated circuits. Although research in optical computing has been going on since the 1960s, great progress has been made only recently. In the near term, hybrid optical-electronic systems will dominate, but certain bottlenecks must be overcome before fully photonic systems can be realized.

Credit: Comsol

Bottlenecks:

One key component of any circuit is wiring. The exorbitant cost of replacing the copper-based electrical wiring in integrated circuits with cutting-edge photonics is a roadblock to commercialisation. Silicon photonics, indium phosphide and gallium arsenide are considered potential platforms that could steer the photonics market. Silicon photonics, for example, is much lighter (100x), smaller and faster (1000x) than copper-based wiring for transmitting the same amount of data.

Credits: HP

While photonic computing allows data to be computed in transit, accessing stored data still requires conversion from current electronic storage such as DRAM. Extensive research is under way to build optical RAM that is much faster than DRAM or SRAM (used for CPU caches).

Even at the hybrid level, converting signals between photons and electrons is a challenge that limits the potential of photonic computing.

Market Landscape:

Optalysys, a UK-based startup, has developed the world’s first optical co-processor system, FT:X 2000. The company aims to enable new levels of AI performance through its optical processing technology, specifically focused on high-resolution image- and video-based applications. By using photons of low-power laser light instead of conventional electricity and its electrons, the company claims its technology is highly scalable while vastly reducing energy demands compared to conventional technology.

Teramount develops a photonic plug for optics-silicon connectivity. The photonic plug enables standard semiconductor manufacturing processes and packaging flows to extend into silicon photonics.

Lightelligence, a US startup with $36M in funding, builds optical chips that process information with photons. Its applications focus on optical neural networks and deep learning, offering gains in computational speed and power efficiency. Celestial AI (previously Inorganic Intelligence), a competitor incubated by The Engine (MIT) with $8M in funding, develops an AI-inference chip based on optical neural networks. Luminous Computing and Optelligence are other startups aiming to build a photonics-based supercomputer on a chip that will handle AI workloads at the speed of light.

Lightmatter, another US startup, has developed a photonic processor and interconnect that offer a next-generation computing platform purpose-built for artificial intelligence by combining electronics, photonics and new algorithms. Passage, an interconnect developed by Lightmatter, offers 1 Tbps at a latency of 5 nanoseconds across an array of 48 chips, compared to the 400 Gbps offered by current optical fibers.

LightOn is a French company developing photonic computing hardware to speed up training and inference on high-dimensional data for certain generic machine learning tasks. The company's first-generation photonic core, Nitro, implements massively parallel matrix-vector multiplications, processing dimensions of up to one million at once within a single clock cycle. Zyphra is a US startup in stealth mode developing a photonic processor based on probabilistic computing schemes.

On the transceiver front, DustPhotonics, an Israeli startup with $28M in funding, develops and manufactures pluggable optical modules and solutions. Cisco acquired Acacia, an optical interconnect company, for $2.6B. It also acquired Luxtera, a US startup that uses silicon photonics to build complex electro-optical systems in a silicon CMOS production process, for $660M.

Photonics is still a decade away from conquering the market, but the promise shown by the technology is exciting.

Resources:

This YouTube series on computing gives a good overview of the history, present and future of computing.